ARTIFICIAL INTELLIGENCE, PROMISE OR PERIL: PART 3 – AI GOVERNANCE AND VENTURE CAPITAL

by Dr. James Baty PhD, Operating Partner & EIR, and Supreet Manchanda & Paul Dugsin, Founding Partners

RAIVEN CAPITAL

This is our third release in our series on governing Artificial Intelligence – AI Promise or Peril

This release consists of three sections:

AI Impact on Business & Markets

AI Impact on VC Operations

Impact of AI Governance on VC

The first release on AI Ethics is available here.

The second release on AI Regulation is available here.

In the inaugural episode of our ‘AI, Promise or Peril’ series, we delved into the clamor surrounding Artificial Intelligence (AI) Ethics, a field as polarizing as it is fascinating. Remember the Future of Life Institute’s six-month moratorium plea, backed by AI luminaries? Opinions ranged from apocalyptic warnings to messianic proclamations to cries of sheer hype.

In our second episode, we examined the chaos around emerging AI Regulation, a cacophony of city, state, national, and international regulatory panels, pronouncements, and significant legislative and commission enactments. We examined the EU AI Act and the US NIST AI Risk Management Framework (AI-RMF) among the key models. We suggested there was a strong case for a US Executive Order on AI based on the NIST AI-RMF. Keep in mind that the US Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence was issued by President Biden on October 30, 2023. In addition, the United Nations announced in October the creation of a 39-member advisory body to address issues in the international governance of artificial intelligence.

Our blog posts observed that the emerging solution to AI governance is not one act or law; it encompasses corporate self-governance, industry standards, market forces, national and international regulations, and especially AI systems regulating other AI systems. AI governance impacts not just those developing AI projects, but also those leveraging existing AI tools. Navigating this terrain involves a complex interplay of legal regulations and voluntary ethical standards. Whether you’re at the helm of an AI project or leveraging AI tools developed by others, a complex web of ethical and regulatory issues awaits.

RAIVEN’s “VC” PERSPECTIVE

Before we begin, let’s mention Raiven Capital’s unique viewpoint:

We’re not in the business of chasing far-fetched dreams. Our game is more grounded: Raiven steps in when the seed phase is over and the MVP is ready, with a keen eye on substantial returns within a five-year window, and where building bridges to capital, knowledge and markets is key to business success.

Our arena? It’s at the forefront of the fifth industrial revolution. Imagine scenarios where technology is not just an aid but an integral part of the human work environment, tackling the real issues plaguing our era and brewing next-gen operational solutions. In this paper we address the larger issues of the industry in general, but also focus on what is primary to our own strategy.

AI IMPACT ON BUSINESS AND MARKETS

Before we go into specific ways AI governance impacts venture capital, let’s consider the broader implications of AI on the business world, particularly in the tech sector. Artificial intelligence isn’t just a buzzword or a trendy add-on in the tech industry; it is a revolutionary force.

Think about it: AI implementations are not just improving business processes; they’re creating entirely new business frameworks. The old ways of doing business? They’re being disrupted and turned on their heads. We’re witnessing a fundamental, potentially existential shift in how businesses operate and compete. For tech businesses, AI is not only a new frontier. It’s the difference between staying relevant or becoming obsolete. It’s not just about being smarter or more efficient. It’s about reimagining what’s possible, reinventing business models, and revolutionizing industries. In the AI era, adaptability, innovation, and foresight are the new currencies.

An Example: Is This the End of Internet Search?

To understand this, let’s consider a specific use-case that is dominating headlines: Is OpenAI / ChatGPT blowing up internet search business models? Google has long reigned supreme, owning 92% of the Internet search market (with Microsoft a distant second at 2.5%). This dominance extends to internet search advertising revenue, where Google commands an impressive 86%, outperforming all other companies in advertising earnings.

Of course, Google has long pioneered research into AI’s potential to enhance this market, but the company has approached its integration with caution. This conservative stance left a gap in the market, a window of opportunity that the introduction of ChatGPT used to radically shake things up. In parallel came Microsoft’s investment in OpenAI, and Marc Andreessen opining on the Lex Fridman podcast as to whether this is the end of Internet search as we know it.

The overall market threat is twofold:

First, a paradigm shift to the current advertising model: If internet search evolves from the traditional listing pages of ten websites, interspersed with advertising, to having the chat interface of the service simply provide ‘the answer,’ then the opportunity to place advertising is notably reduced.

Secondly, disruption of the website business model: If search just returns an answer and no website listing, the larger implication is profound. The conventional website business model, which has been the mainstay for over 25 years, faces significant disruption. The traditional storefront and public relations image, represented by a website, and its value as an internet face for all businesses could be radically diminished or rendered obsolete.

However, it’s essential to consider the concurrent advances in user experience architecture. Take the example of the Bing/Edge interface developed by Microsoft, which integrates AI tools within a broader web window design and strategically incorporates generative chat functionality. This approach does not merely deliver answers; it also connects users to the relevant articles and webpages that inform those answers, simultaneously creating new opportunities for advertising placement. Such an enriched environment, where generative AI and conversational prompting emerge as key components of the interface, is set to redefine our interaction with internet resources.

And the players? Where Microsoft was facing a continuously declining share of Internet search traffic and advertising revenue, it now has become a player in defining the next internet interface with its investment in OpenAI. Clearly, this is not the end for Google. It has already released its Bard interface using its LaMDA LLM generative AI technology. And it still has volumes more user data and subscribers than its closest competition, but this may be the moment that classical ‘internet search’ and its integrated advertising model ‘jumped the shark’.

In summary, while the fundamental primitives of search, advertising, and the Web will persist, their operational dynamics are poised for significant change. Emerging trends suggest a transition from reliance on user-generated keywords to more sophisticated AI-driven guidance, enabling a more anticipatory and personalized user experience. This marks a significant evolution in the top-level user interface of the application stack, heralding a new era in digital interaction and information retrieval. Personalization and AI agents will be the new top UI level of the application stack.

Insights for Investors / VCs: AI will notably disrupt search, advertising and web presence.

There are loads of ways in which AI is impacting business, and it would be a dissertation to begin to examine all of them here, so let’s leave this one example as to why understanding the business impacts of AI is so important to venture capital.

AI is creating huge opportunities, both AI businesses and businesses leveraging AI, that impact competitive market structure and profitability. The use of AI to amplify human capital will be a key component of transforming entire industries in the fifth industrial revolution. This idea is as big as any of the previous industrial revolutions: artificial intelligence will reshape businesses, moving them to unprecedented levels of autonomy by integrating decision making into processes, leading to higher potential profitability. Paradoxically, competitive use of the technology also squeezes profit. AngelList reported midyear that “AI deal share has increased more than 200% on AngelList in the past year, even while the venture market is down 80% in 2023”.

AI is hot!! It is critical to understand the transformative effects of AI and other IT technology on the business landscape and the economy, especially when evaluating and investing in VC opportunities. Clearly AI is driving a wave of new startups.

Insights for Investors / VCs: Look for even more disruptive opportunities.

AI IMPACT ON VC OPERATIONS

AI Fintech is Improving Basic VC Operations

How will artificial intelligence impact the venture capital industry? It already has. Virtually every tool, resource, support component and activity in venture capital already has AI integrated into it. AI’s integration is far from superficial; it is foundational. Fundamental tools and resources pivotal to VC operations, like Pitchbook and Carta, are rapidly expanding their use of AI technologies. This isn’t just a trend riding the wave of ChatGPT’s media frenzy; it’s an ongoing strategic evolution.

Venture capital fintech firms have been embedding AI into their frameworks for years, subtly yet significantly altering the industry’s anatomy. It’s not about replacing human decision-making but augmenting it with data-driven insights. This adoption extends beyond basic functionalities, marking a paradigm shift in how VC firms operate.

To understand the depth of AI’s impact on the VC landscape let’s consider the key sectors where AI is transforming VC, and examine specific instances and the aspects of the VC process they influence.

At the top level, we can categorize the significant impact of AI on the VC process into three core areas:

Enhancing the deal sourcing and due diligence process: AI can help VC firms find and evaluate potential investments by analyzing large amounts of data, such as market trends, company performance, social media sentiment, and customer feedback. AI can also help VC firms reduce the risk of bias and human error in their decision making.

Providing personalized and data-driven advice to portfolio companies: AI can help VC firms provide better support and guidance to their portfolio companies by leveraging data and analytics to generate insights and recommendations. AI can also help VC firms monitor the performance and health of their portfolio companies and identify potential issues or opportunities.

Improving the efficiency and transparency of the VC industry: AI can help VC firms improve their internal operations and processes by automating tasks, such as reporting, accounting, and compliance. AI will help VC firms increase their transparency and accountability by providing clear and consistent communication and feedback to their stakeholders, such as investors, founders, and regulators at scale.

Specific Fintech Applications of AI in VC

To understand how broad and deep this revolution is, let’s list a few significant specific ways in which AI is being used in the improvement of venture capital processes and operations.

Deal sourcing: AI can be used to identify promising startups and entrepreneurs before they become widely known, giving venture capitalists an early advantage. This can lead to more lucrative investment opportunities.

- AngelList uses AI to identify promising startups and entrepreneurs before they become widely known.

- Crunchbase provides a database of startups and entrepreneurs that can be searched using AI to identify promising investment opportunities.

Due diligence and risk assessment: AI algorithms can analyze large amounts of data, including financial statements, market trends, and social media sentiment, to identify potential risks and opportunities in investment prospects. This can help venture capitalists make more informed decisions and avoid costly mistakes.

- SignalFire uses AI to analyze company data, news articles, and social media to identify potential risks and opportunities for venture capitalists.

- Databricks provides a cloud-based platform that allows venture capitalists to analyze large amounts of data to identify trends and patterns that may indicate a promising investment opportunity.

Predictive analytics: AI can be used to predict the future performance of companies, helping venture capitalists identify promising investment opportunities early on. This gives them a competitive edge and increases their chances of success.

- AlphaSense uses natural language processing (NLP) to analyze company filings, news articles, and social media to predict a company’s future performance.

- CB Insights uses machine learning to identify companies that are likely to be acquired or go public.

Regulatory compliance: AI can help venture capital firms comply with complex regulatory requirements by automating tasks such as KYC/AML checks and reporting. This can save time and money and reduce the risk of fines or penalties.

- RegTech Solutions uses AI to automate KYC/AML checks and reporting for venture capital firms.

- ComplyAdvantage provides a cloud-based platform that helps venture capital firms comply with complex regulatory requirements.

Data analytics and visualization: AI can help venture capitalists make better decisions by providing them with insights from large datasets. This can include things like market trends, competitor analysis, and customer behavior.

- Tableau provides a platform that allows venture capitalists to visualize and analyze large datasets.

- Qlik provides a platform that allows venture capitalists to create interactive dashboards that can be used to track market trends and competitor analysis.

Fraud detection and prevention: AI can be used to detect fraudulent activities in real time, protecting venture capital firms from financial losses.

- Recosense provides various AI fraud detection solutions such as the ability to detect fake financial statements.

Portfolio management: AI can help venture capitalists optimize their portfolios by identifying overvalued or undervalued assets and suggesting rebalancing strategies. This can help them maximize returns and minimize risk.

Chatbots and virtual assistants: AI-powered chatbots and virtual assistants will provide 24/7 customer support and answer frequently asked questions, freeing up humans to focus on more strategic tasks.

OK, you get it, AI is everywhere. Above are just a few examples of how AI is being used to improve venture capital, but it’s clear that AI is becoming pervasive in the platforms servicing the Fintech / VC industry. Much of this is from traditional symbolic AI (structured data / rule-based systems / expert systems), but generative AI (unstructured data / text generation / machine learning) is also fast emerging in VC tools.

Insights for Investors / VCs: Look for even more disruptive opportunities.

Some Emerging Specific Generative AI uses at Raiven Capital.

While much of the current AI applied to fintech and the VC industry is classical symbolic AI, there is of course a great deal of recent interest in generative AI. At Raiven, for example, here are three use cases where we are experimenting with generative AI:

1. Document editing

Using (private / non-saved) generative chat products (ChatGPT, Bard, Edge, etc) to help edit text and documents. This is primarily in two areas:

- Selective editing of text for consistent smoothing of content tone (not to generate content):

This involves providing the LLM examples of the persona desired and related prompt engineering. It also generally involves integrating multiple passes of the review.

- Generation of graphics to illustrate key points.

2. Doing founder background / market analysis / research

While key founder background can be found from general internet resources and especially from many of the tools mentioned above in the VC fintech review, it is also proving useful to use prompt engineering to provide more focused founder background / market analysis / research.

3. Coachability scenario development

We are considered active investors, connecting our founders with a diverse set of connections and resources, bridging them to markets and providing ‘coaching’ to help them succeed. As part of our process, we filter for founders’ desire to learn (coachability). So, a key component of our investment strategy emphasizes the following:

- Selecting founders for coachability.

- Providing more intimate hands-on guidance

- Avoiding common ‘anti pattern’ behaviors that are known failure generators.

We are experimenting with generative AI to quickly create rich scenarios illustrating recurring entrepreneurial patterns, or anti-patterns, to enrich the ‘coaching conversation’.

In summary, we believe in high-touch investing, and we believe that AI will improve the ability to deliver and scale that value to our founders.

Insights for Investors / VCs:

Like all 5IR, human + machine is offering improved VC ops, including both symbolic and generative AI.

Will AI Kill Venture Capital?

Beyond efficiencies, many are suggesting that AI also has the potential to radically change the overall business of VC. Sam Altman has talked about the end of the ‘venture capital industrial complex’. The idea is that major funds have grown too large, dominated by increasing ticket size, with too much focus on exits, manifesting a need to return to more innovation. A more extreme suggestion is that AI could replace the human VC function. Chamath Palihapitiya, CEO of Social Capital and the face of the SPAC boom, proposed that there’s a reasonable case that the job of venture capitalist will cease to exist.

The key elements of AI disruption that Palihapitiya and Altman allude to include:

- overall move to the ‘creator’ economy

- based on smaller AI-centric startups (two founders plus some AI tools)

- funded by DeFi mechanisms.

Certainly these ‘forces’ exist. But to understand the current state of this evolution, let’s look at the recent impact of AI on basic investment structure. For now, the new AI-controlled investment model seems slow to take off:

- The WSJ reports that as yet, AI managed ETFs are lagging the market.

- Bloomberg reports that AI-managed funds are cool to actually investing in AI companies.

The VC market isn’t standing still, but it seems to be evolving to address the challenges rather than disappearing overnight. A near-term AI-enabled shift may increasingly settle on something in between: no longer the unchallenged domination of the traditional VC behemoths, but not yet their replacement by DeFi-financed two-person startups.

Perhaps there is an emerging middle ground:

- Lots of agile startups leveraging new tech

- Germinated in emerging zones of innovation (not limited to Silicon Valley)

- Smaller creator startups benefiting from more intimate hands-on VC guidance (what we emphasize at Raiven as ‘Bridges Plus Coaching’).

In Summary, there’s more of everything: Disruption, AI Startups, and Evolutionary VC Services.

As suggested in the first section, AI’s effect on business leads to dramatic productivity gains and will move toward millions of startups made up of teams of one or two. As noted before, pervasive AI tooling could significantly evolve the VC function. But in our opinion, this isn’t the end of the ‘VC industry.’

As AI technology continues to develop, we expect to see even more innovative ways in which AI is used to improve venture capital. This will help VCs make more informed decisions, increase their chances of success, and ultimately drive innovation in the fintech industry. Not an end to the VC industry, but a reinvigoration of the startup model. Note: Contrary to their predictions of failure and impending doom, Palihapitiya’s SPACs haven’t replaced IPOs and Altman is still starting new VC funds.

Insights for Investors / VCs:

Look for radical opportunities to improve scale, analytics, and involvement.

IMPACT OF AI GOVERNANCE ON VC

So, at a strategic level, what does this proliferation of AI and AI regulation mean for the business strategy and operations of the VC community? Be vigilant.

AI has become pervasive and ubiquitous. Artificial intelligence is being integrated into nearly every product or service, ranging from life-saving medical devices to self-checkout cash registers. In this landscape, it is crucial for VC investors to discern between valuable opportunities, less promising ones and pure hype.

AI safety has emerged as a critical consideration. Numerous well-established frameworks for AI ethics and principles exist. Companies heavily involved in AI technology should have their own set of AI principles, like industry leaders such as Google, IBM, Microsoft, and BMW.

AI regulation is now a reality and is expected to expand further. From state-level agencies to nationwide or EU-wide regulations, and from agencies overseeing medical devices and consumer safety to labor practices, the regulatory landscape for AI is rapidly evolving.

AI regulation needs to be actively tracked. It is essential for VC firms engaged in significant AI development or exploration to assign someone responsible for closely monitoring these dynamic regulatory developments. Silicon Valley Bank’s failure serves as a cautionary tale. Operating without a Chief Risk Officer during a critical period of transformation in the technology and financial markets was a formula for disaster.

VC-Specific AI Governance

Clearly, as we have discussed in the last two episodes, ‘AI governance’ is moving full-speed ahead with ethical frameworks and legally enforced regulations on AI business. It is equally clear that AI governance is affecting the VC business. Similar to the Partnership on AI (focused on the major players in AI), which we discussed in the first episode of this series on Ethics (and which has just released its Guidance for Safe Foundation Model Deployment), another industry group focused on ethical AI has started: Responsible Innovation Labs has just launched its Responsible AI Commitments and Protocol, focused on startups and investors.

Forty plus VC firms pledged their organizations to make reasonable efforts to:

- Encourage portfolio companies to make these voluntary commitments (see the Protocol here).

- Consult these voluntary commitments when conducting diligence on potential investments in AI startups.

- Foster responsible AI practices among portfolio companies.

However, the RI Labs Protocol is not without some controversy, somewhat reminiscent of that Pause Giant AI Experiments open letter mentioned in the Ethics episode.

While RI Labs claims its Commitments and Protocol have been endorsed by the Department of Commerce (DOC), so far the DOC has only said: “We’re encouraged to see venture capitalists, startups, and business leaders rallying around this and similar efforts.” And while RI Labs does count General Catalyst, Bain, Khosla, Warby Parker and other notable VCs amongst its members and signatories, The Information’s Jessica Lessin quotes Andreessen GP Martin Casado, who represents the vocal opposition (which also includes Kleiner Perkins partner Bucky Moore and Yann LeCun, Meta Platforms’ AI research chief), as saying that “…he could only think of two reasons why a VC would back the guidelines: They’re not a serious technologist or they’re trying to virtue-signal.”

Clearly the AI hype and governance battleground has reached the VC sanctuaries. While this provides plenty of PR fodder, it’s certainly true that VC firms big and small are at the forefront of evaluating AI startups and opportunities, not only for financial risk, but also for ethical and regulatory risk. How should we organize – join PAI or RI Labs, or develop our own guidelines – or all three? More than just guidelines, Andy McAdams of Byte Sized Ethics suggests creating our own AI Risk Scorecard, similar to the various AI indexes developed at Stanford HAI. Andy notes that it may be difficult to find the data to evaluate; the emerging regulations will help, but they aren’t yet fully implemented.

Insights for Investors / VCs:

AI Governance: Ethics & regulation will affect almost all founders.

What are we doing at Raiven Capital? AI Proposal Risk-Triage

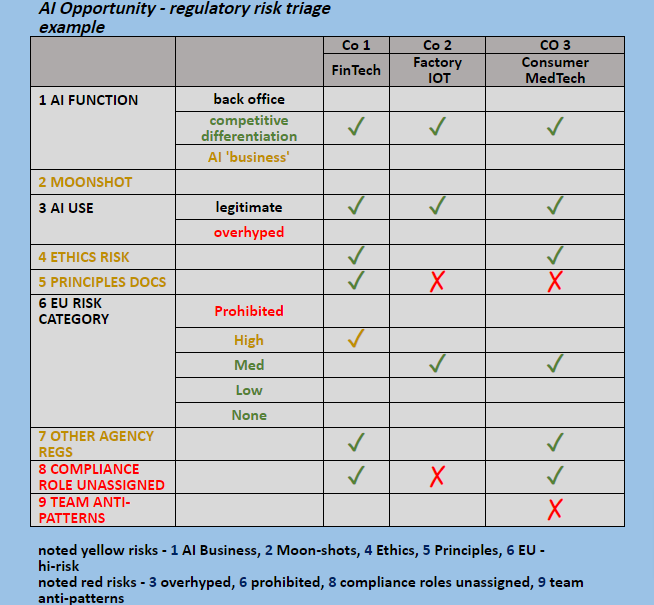

‘Commitments’ and ‘Manifestos’ are great for developers (and Founders should have them if they are doing serious AI dev), and an AI heavy VC may want to have its own ‘Principles’, but as a VC firm evaluating proposals, what are the key items to look for in evaluating the AI governance issues and risk?

AI GOVERNANCE – FOUNDER PITCH REVIEW QUESTIONS

Examples of specific considerations to look for in gating an AI-centric investment:

1 – What is the place of AI in business strategy?

Is AI a minor product feature, with pass through liability to an upstream core developer? Is original AI development part of the business? Or is this business itself a core AI product or service? In most of the existing regulatory frameworks this is an important consideration in the appropriate risk assessment.

A. If AI is a part of background operations, does it provide basic business efficiency?

- You’re probably just a user with application risk, but NOT responsible for the product.

B. If AI is a major competitive differentiator, is it core to the business model?

- There may be unique application product or service liability.

- Significant competitive shifts in the tech or its regulations may greatly impact business model success, market share or financial returns.

C. Is this an AI business?

- There may be unique application product or service liability.

- You’re a product / service creator.

- You likely have primary regulatory responsibility.

CONSIDERATIONS

- Obviously, AI product creators (C) have higher regulatory exposure.

- Competitive differentiators (B) should be concerned about product and data rights.

- Use of AI ‘foundation models’ may imply business risk.

- Private data models have more secure IP and clearer data rights.

- In all cases there may be ethical issues.
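As a rough illustration only, the three categories in question 1 could be recorded in a small lookup so that every pitch review starts from the same notes. This is a sketch, not a tool Raiven describes using: the names `AIRole`, `ROLE_NOTES`, `classify_ai_role` and `review_notes` are hypothetical, and the notes simply paraphrase the bullets above.

```python
from enum import Enum


class AIRole(Enum):
    """Place of AI in the business strategy (categories A, B and C above)."""
    BACKGROUND_OPERATIONS = "A"
    COMPETITIVE_DIFFERENTIATOR = "B"
    AI_BUSINESS = "C"


# Notes paraphrased from the bullets above; the wording is illustrative.
ROLE_NOTES = {
    AIRole.BACKGROUND_OPERATIONS: [
        "Probably a user with application risk, not responsible for the product.",
    ],
    AIRole.COMPETITIVE_DIFFERENTIATOR: [
        "Possible unique application product or service liability.",
        "Exposed to competitive shifts in the tech or its regulations.",
        "Should be concerned about product and data rights.",
    ],
    AIRole.AI_BUSINESS: [
        "Possible unique application product or service liability.",
        "Product / service creator.",
        "Likely primary regulatory responsibility and higher regulatory exposure.",
    ],
}

# The considerations say this applies in all cases.
COMMON_NOTE = "There may be ethical issues."


def classify_ai_role(is_ai_business: bool, is_core_differentiator: bool) -> AIRole:
    """Hypothetical helper: map two yes/no pitch answers to a category."""
    if is_ai_business:
        return AIRole.AI_BUSINESS
    if is_core_differentiator:
        return AIRole.COMPETITIVE_DIFFERENTIATOR
    return AIRole.BACKGROUND_OPERATIONS


def review_notes(role: AIRole) -> list[str]:
    """Return the category notes plus the note that applies to every category."""
    return ROLE_NOTES[role] + [COMMON_NOTE]


if __name__ == "__main__":
    role = classify_ai_role(is_ai_business=False, is_core_differentiator=True)
    for note in review_notes(role):
        print(f"[{role.value}] {note}")
```

The point of the sketch is only that the category is decided first, and the regulatory and liability notes follow from it.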

2 – Is this a ‘moonshot’ or ‘deep-tech’?

Lots of AI projects are ambitious and groundbreaking ideas or technologies with a longer time frame for development and commercialization, and higher business risk. Thus, they may not be appropriate for a shorter maturity portfolio strategy.

CONSIDERATIONS

- Operational AI is notably able to generate line of sight to positive cash flow, and clear exit strategies.

- Longer time frame speculative projects may be appropriate for very large multi-fund investment houses, or stand-alone mega projects, but of course imply greater business model risk.

3 – Is the use of AI legitimate and reasonable, or grossly overhyped?

Avoid investment opportunities that are exaggerating claims of AI in their product (note that this is a specific FTC red flag).

You’ve probably heard of the Turing Test. But have you heard of the ‘Luring Test’? The FTC is all over AI. In addition to the previous regulatory warnings on discrimination and unfairness, the FTC has explicitly warned against the “automation bias” potential of generative AI, ‘luring’ people with advertising disguised as truthful content. It is essential to track this source of regulatory risk.

CONSIDERATIONS

- While much AI regulation is emerging, Section 5 of the FTC Act prohibits unfair or deceptive practices.

- As noted in Episode 2, the FTC has announced it is focusing on preventing false or unsubstantiated claims about AI-powered products, and joined with the Civil Rights Division of the US DOJ, the Consumer Financial Protection Bureau, and the EEOC issuing a joint statement committing to fairness, equality, and justice in emerging automated systems, including those marketed as “artificial intelligence” or “AI.”

- There’s always hype in the tech biz, but there is a clear federal mandate to police excessive / unsubstantiated AI claims.

4 – Is this business subject to the ethical considerations of AI?

Consider, for example, inherent bias or discrimination issues or potential. These should be understood and addressed.

CONSIDERATIONS

- Discrimination in many areas is strongly regulated without new legislation.

- Founders should consider:

- adopting one of the industry’s recommended ethical frameworks,

- developing one internally,

- and/or joining the Partnership on AI.

- Investors may want to create stated ethical principles on AI investment.

- Using AI to address or prevent discrimination could be a key benefit.

5 – Is there an ‘AI Principles document’?

There are plenty of examples. If AI is a significant part of the product or service development, has a set of governing principles been adopted?

CONSIDERATIONS

If AI is a significant part of the business, whether as competitive differentiation or as an AI core product, it is essential to have a statement of principles. They are likely required under various regulatory components.

6 – What EU AI Act risk category is involved?

Even though it isn’t yet fully implemented, avoid unacceptable risk and consider the high risk category very cautiously (e.g., some high-risk areas such as life-critical medical applications, are already subject to clear guidance and regulations in the US and EU, and may be reasonable investments).

CONSIDERATIONS

There is enough preliminary data to know whether a proposed opportunity is likely to be categorized high-risk. Know your category!

7 – Is this business the subject of existing regulations that may be particularized for AI? For example, medical devices / FDA

CONSIDERATIONS

Don’t wait for the AI Act or the Executive Order to be in force. Many applications are already covered by existing regulatory agencies, especially in the US. Know your agency.

8 – Is there an AI compliance person?

If the use of AI is significant, does the firm have a person specifically identified to track and govern AI (and other) regulatory guidance? This could be a shared role, but it should exist.

CONSIDERATIONS

Whether it’s about data privacy, or AI regulations, or banks in Silicon Valley, you should have an active designated compliance person!

9 – Consider team dynamics and related anti-patterns

At Raiven Capital, we understand that a key element of investment success is to closely vet the founder’s team, not only for core competence and a strong sense of curiosity, but also for team chemistry that includes a capacity for coachability. We specifically analyze for recurring patterns and anti-patterns that help identify investment risk and understand the founders and the opportunity.

CONSIDERATIONS

- In IP heavy technology firms, especially including AI, there are a couple of not uncommon anti-patterns to be wary of. Watch out for:

- No IP strategy. Not every startup will have the funding to pursue patents early, but if there is significant IP there should be some form of IP strategy.

- IP self-dealing. At the opposite end are founders who, fully aware of the potential value of the IP, attempt to segment it and hide it in another corporate entity. A ‘tech’ start-up should own its critical IP.

- In any case, realize AI is increasingly regulated, and this is a moving target. Stay informed and anticipate evolving regulations.

The purpose of these questions is classic VC deal-gating plus potential further action or coaching. The following table gives some hypothetical examples. At this point in the regulatory rollout and stage of use, such questions would be premature to use in a scored calculation. Questions are color coded to indicate levels of potential risk. Almost no question will be an absolute deal killer. Prohibited EU risk activity, e.g. facial recognition, is okay for limited nonpublic security applications. Other red items, such as not having an assigned compliance person, can be fixed during company buildout. The only real deal killer is overhyped AI, which is an FTC and SEC red flag. In these examples, company one appears ready to go even though it may be EU high risk. Companies two and three have correctable items should they represent otherwise good investments. Some of these are interdependent. For example, if the company is an “AI Business,” it must have a ‘Principles’ document.
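To make the gating logic in the paragraph above concrete, here is a minimal sketch under stated assumptions. It deliberately computes no numeric score, since the text says scoring is premature; it only separates non-fixable red flags (such as overhyped AI) from items that can be corrected or coached, and applies the one interdependency mentioned (an AI business must have a Principles document). The names `Flag`, `Answer` and `triage`, and the three decision labels, are hypothetical and not part of any Raiven tool.

```python
from dataclasses import dataclass
from enum import Enum


class Flag(Enum):
    GREEN = "green"
    AMBER = "amber"
    RED = "red"


@dataclass
class Answer:
    question: str          # short label for a pitch review question
    flag: Flag             # colour assigned by the human reviewer
    fixable: bool = True   # can this be corrected during company buildout?


def triage(answers: list[Answer], is_ai_business: bool, has_principles_doc: bool) -> dict:
    """Gate a proposal by flag colour, without computing a score."""
    answers = list(answers)  # copy so the caller's list is not modified
    # Interdependency from the text: an "AI Business" must have a Principles document.
    if is_ai_business and not has_principles_doc:
        answers.append(Answer("AI Principles document (required for an AI business)", Flag.RED))

    deal_killers = [a.question for a in answers if a.flag is Flag.RED and not a.fixable]
    to_coach = [a.question for a in answers if a.flag is not Flag.GREEN and a.fixable]

    if deal_killers:
        decision = "decline"
    elif to_coach:
        decision = "proceed with follow-up"
    else:
        decision = "proceed"
    return {"decision": decision, "deal_killers": deal_killers, "to_coach": to_coach}


if __name__ == "__main__":
    # Hypothetical company: amber on EU risk category, red on a fixable item.
    company = [
        Answer("Overhyped AI claims", Flag.GREEN),
        Answer("EU AI Act risk category", Flag.AMBER),
        Answer("AI compliance person", Flag.RED),
    ]
    print(triage(company, is_ai_business=True, has_principles_doc=True))
```

In this sketch the reviewer, not the code, assigns each colour and decides whether a red item is fixable; the function only keeps the gating rule consistent from one proposal to the next.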

Insights for Investors / VCs:

Adopt AI governance ‘deal gating’ to understand potential regulatory implications.
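As a rough illustration of the deal-gating questions described above, the following Python sketch records each answer with a color flag instead of a score, and checks the one interdependency named in the text (an ‘AI Business’ must have a ‘Principles’ document). The question wording, the class and function names, and the sample company are all hypothetical, made up for illustration; this is not an actual Raiven Capital checklist.

```python
from dataclasses import dataclass
from enum import Enum


class Flag(Enum):
    GREEN = "green"
    AMBER = "amber"
    RED = "red"


@dataclass(frozen=True)
class GateAnswer:
    question: str          # hypothetical wording, not an official checklist
    flag: Flag             # color code for potential risk
    fixable: bool = True   # most red items can be fixed during buildout


def summarize(answers):
    """Group questions by color flag; deliberately no numeric score."""
    summary = {flag: [] for flag in Flag}
    for item in answers:
        summary[item.flag].append(item.question)
    return summary


def deal_killers(answers):
    """Return only red items that cannot be corrected after investment."""
    return [a.question for a in answers if a.flag is Flag.RED and not a.fixable]


def interdependency_issues(is_ai_business, has_principles_doc):
    """The one dependency named in the text: AI Business -> Principles doc."""
    if is_ai_business and not has_principles_doc:
        return ["'AI Business' has no 'Principles' document"]
    return []


# Hypothetical answers for an imaginary company.
company = [
    GateAnswer("Likely EU high-risk category", Flag.AMBER),
    GateAnswer("No assigned compliance person", Flag.RED),
    GateAnswer("Overhyped AI claims", Flag.RED, fixable=False),
]

print(summarize(company)[Flag.RED])
print(deal_killers(company))
print(interdependency_issues(is_ai_business=True, has_principles_doc=False))
```

Keeping the flags categorical rather than numeric mirrors the point that a scored calculation would be premature: the output is a list of items to discuss or coach on, not a pass/fail number.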

A Strategy for ‘Now’: ‘Aligning’ the EU and US risk models

How do you create or invest in a business today, while the implementations of the regulations are still to be determined? Answering the questions above can help by anticipating what regulatory categories the investment will likely fall into.

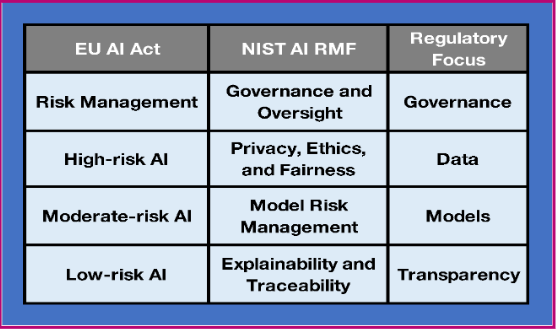

It’s possible, even necessary, to develop a preliminary strategy especially if thinking globally. The EU AI Act and the NIST AI RMF are the two key sets of guidelines that focus on ethical and responsible development and deployment of artificial intelligence.

While they share many common goals, there are some key differences between the two frameworks. The aim is a strategy that aligns with the proposed categories in the EU AI Act and the NIST AI RMF (the key framework of the US AI Executive Order), and that anticipates how they impact a specific start-up company’s use of AI and its likely regulatory compliance.

An individual founder may be subject to one or both. AI startups that want to operate globally can benefit by aligning their AI development and practices with both frameworks.

Here is a table that illustrates a high-level alignment of the approaches in the EU AI Act and the NIST AI RMF:

For example, the table above illustrates the different approaches:

- The overall Governance model strategy of the EU AI Act is ‘Risk Management’, while in the NIST AI RMF it is framed more as ‘Governance and Oversight’.

- In the EU AI Act, the critical focus in High-risk AI is especially on the input / training data, and similarly NIST emphasizes the Data aspects of ‘Privacy, Ethics and Fairness’.

- Finally, the emphasis in Low-risk AI in both models is on basic Transparency, Explainability and Traceability – here it’s assumed the application is ‘OK’, but we want to be able to examine the results if there is some liability question.

Real applications of the regulatory approaches are much more detailed, and need to wait for their implementation, but the table suggests that there will be reasonable synergy. By understanding how these categories align, AI startups can develop a comprehensive approach to regulatory compliance, aligning principles and practices with the emerging regulatory focus.

Based on this concept of aligning the regulatory strategies, here are four specific things AI startups can do to align their AI development and practices with both frameworks:

1. Develop a risk management framework

Both the EU AI Act and the NIST AI RMF (which is a voluntary framework) call on AI developers to identify, assess, and manage the risks associated with their AI systems. AI startups should develop a risk management framework that is tailored to their specific AI products and services. This framework should include processes for identifying and assessing risks, as well as for implementing mitigation strategies.

2. Implement data privacy and security measures

Both the EU AI Act and the NIST AI RMF call on AI developers to protect the privacy and security of the data that they use and collect. AI startups should implement data privacy and security measures that are appropriate for the type of data that they use. These measures should include safeguards against unauthorized access, data breaches, and discriminatory use of data.

3. Develop explainable and traceable AI models

Both the EU AI Act and the NIST AI RMF call on AI developers to make their AI models explainable and traceable. This means that AI developers should be able to explain how their AI models make decisions and be able to trace the data that was used to develop and train their models.

4. Be transparent about AI development and deployment

Both the EU AI Act and the NIST AI RMF call on AI developers to be transparent about their AI development and deployment processes. This means that AI developers should provide clear and accessible information about their AI products and services, including the types of data that they use, the algorithms that they use, and the risks that are associated with their AI products and services.

By aligning their AI development and practices with both the EU AI Act and the NIST AI RMF, AI startups can position themselves for global success while meeting the growing demand for responsible AI development and deployment. Both of these frameworks are under implementation, with many details of regulation emerging over the next year. So, the key strategy is to understand how a particular company might be categorized and affected, and to track the emergence of the specific regulations. Investors, in turn, should look for this strategy in the companies they evaluate.

In summary, the human intuitive component is critical in evaluating startups, managing portfolios and coaching founders. Private capital is inherently nontransparent, and emerging AI is even worse from a technical perspective. At some point, with more regulation and transparency, there will be a larger role for algorithmic evaluation. Right now, the VC ecosystem relies especially on human experts aided by technology. VCs have checklists, not algorithms, as the data is too often unknown or fragmented. Further, the application of AI would be very subjective, as each startup is unique. The coming AI regulations are the first step in providing better context.

Insights for Investors / VCs:

We’re in the emerging regulations phase – while details are to be determined. Start building a strategy now.

SECTION 4 – Key Outstanding Issues

Finally, before we leave you, let’s go back to the beginning. We started this series addressing ‘The Letter’… “Pause Giant AI Experiments: An Open Letter”, crafted by the Future of Life Institute and signed by many of the AI ‘hero-founders,’ who warn of the existential risk of Artificial Intelligence. Emily Bender cautioned against a ‘Longtermism’ that ignores real current issues. Her comments reminded us of a cyclical pattern in technology adoption, where fear and hype (FOMO) are instrumental drivers of decision-making. At the same time, Hinton, Yudkowsky and others suggest we are potentially much closer to AGI and ASI (Artificial General Intelligence / Artificial Super Intelligence) than the previous consensus.

FOOM or FOMO?

Recently, this battle exploded onto our screens again, with a flood of insider leaks as to why the BOD of OpenAI fired CEO Sam Altman, lost him to Microsoft, and then hired him back. There were also two more ‘letters’, one signed by the vast majority of OpenAI workers threatening to quit, and another rumored to explain the threat of Altman’s course of action. At the root of the existential angst, and the competition between the Big-Tech AI players, is the concept of FOOM. And this acronym has a mysterious double entendre …

– Fast Onset of Overwhelming Mastery – that once you cross some initial AGI trigger, ASI will come almost instantly.

– First Out Of-the-Gate Model – that the first to achieve near AGI will have an unassailable lead.

Those concerned with the existential risk of AI believe it may happen too fast to control, and those concerned with AI corporate success want desperately to be first. This leads us back to that other acronym mentioned in Episode 1 – FOMO, Fear Of Missing Out, from David Noble’s historical analysis of workplace / factory floor automation. Which one is it? FOOM or FOMO? Essentially both. There are real threats of moving too fast on AI, and there’s certainly a FOMO cloud of super-hype alluded to by Bender, the perceived threat of moving too slow.

Insights for Investors / VCs:

Understand the risk, beware of the hype.

Be Careful of the Corporate Structure

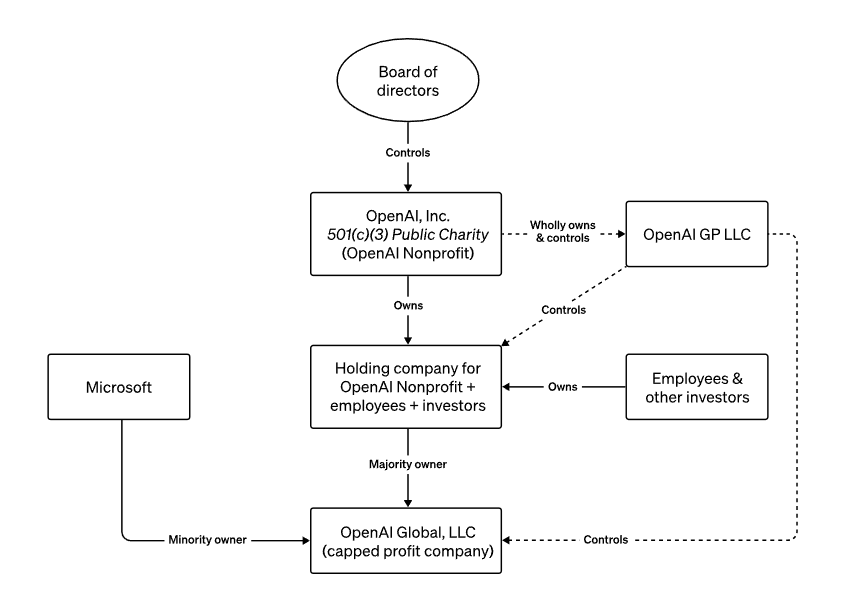

To address the existential risk of AI and to move as quickly as possible to commercialize, OpenAI evolved a complicated structure with a BOD overseeing a not-for-profit to watch over the corporate enthusiasm, like a built-in red team.

On their website, they state: “We designed OpenAI’s structure—a partnership between our original nonprofit and a new capped profit arm—as a chassis for OpenAI’s mission: to build artificial general intelligence (AGI) that is safe and benefits all of humanity.”

We won’t try here to analyze the ‘existential threat’ / effective altruism arguments around the ‘Altman was fired, he’s hired away, he’s back’ drama. For one detailed analysis of what went down from the perspective of trying to protect the world from ‘existential risk’ AI, see Tomas Pueyo’s post on his Uncharted Territories blog, OpenAI and the Biggest Threat in the History of Humanity. But the key issue to point out here is that if, as a VC / Investor, someone shows you an org chart like this, take note.

If a Board of Directors controls a Not-for-Profit (501(c)(3)) that controls a GP LLC, which in turn controls a holding company and a separate capped-profit LLC, then you should really understand the governance risk and be prepared for chaos. The intended goal was to institute ethical non-profit control over an AI startup focused on commercial opportunities, and on the verge of existential risk. In the end it created headlines and may not have ultimately achieved the desired goal.

Insights for Investors / VCs:

Beware Russian-Doll corporate structures.

The Who and What Gets Regulated Challenge: GPAI / Foundation Models

Determining who should be regulated is a special challenge. The goal is to strike a balance between regulating AI to ensure safety without stifling innovation. Efforts are being made to allocate regulatory responsibility appropriately within the technology life cycle, limiting burdens on small innovative businesses while assigning primary liability to larger development institutions rather than individual users or smaller customers. Regulation aims to encourage innovation while ensuring safety.

There is a continuing argument by large General Purpose AI (GPAI) developers (e.g., OpenAI, Google, Meta, Microsoft) that they are only building tools, should not be regulated and should be considered low risk. This contention was a major sticking point in the finalization of the EU AI Act. The resulting compromise is to try to regulate some level of associated intrinsic application risk at the level of the developer of the GPAI, or what are known as Foundation Models. A foundation model is a large-scale machine learning model that is pre-trained on an extensive dataset, usually encompassing a wide range of topics and formats. Examples include OpenAI’s GPT and DALL-E, and Google’s BERT (Bidirectional Encoder Representations from Transformers) and T5.

These models are designed to learn a broad understanding of the world, language, images, or other data types from this large-scale training. An MIT Connection Science Working Paper suggests that the market competition amongst the major foundation models is a major battle ground, similar to, but perhaps more important than the browser, social media and other ‘platform wars’ that preceded them. The Brookings Institution suggests the potential market for foundation models may encompass the entire economy, with high risks of market concentration.

Foundation Models are a regulatory challenge given that they are basic technology with many diverse applications. It’s typically the applications that are the regulatory risk, but owing to the scale, complexity, rapid technological advancement, lack of transparency and global reach, regulators have focused on how to assess, manage and control the cumulative risk arising from the development of these foundation models. This is both a scientific and regulatory challenge that will not be fully decided anytime soon.

The ‘good news’ on the self-regulatory front is that the PAI (Partnership on AI – which includes the majority of foundation model developers, e.g., OpenAI, Google, Microsoft, IBM, Meta…) has just released their ‘Guidance for Safe Foundation Model Deployment: A Framework for Collective Action’. This effort was started in April by PAI’s Safety Critical AI steering committee, which is made up of experts from the Alan Turing Institute, the American Civil Liberties Union, Anthropic, DeepMind, IBM, Meta, and the Schwartz Reisman Institute for Technology and Society. While there are ‘longtermists’ and ‘effective altruism’ advocates at PAI, PAI is more focused on immediate governance, best practices, and collaboration between different stakeholders in the AI ecosystem.

HOORAH! Substantive progress is being made on this important topic.

The ‘better news’ is that the group that is chartered to establish the EU framework for regulating foundation models has just reached a decision. In mid-October 2023, it was announced that EU countries were settling on a tiered approach to regulating foundation models, in which the bigger developers and projects would get stricter attention. Then, in mid-November, France, Germany, and Italy asked to retract the proposed tiered approach for foundation models, opting for a more voluntary model, and causing a deadlock that put the whole legislation at risk if not resolved. Finally, on December 9th the EU Council presidency and the European Parliament’s negotiators reached a provisional agreement, including rules for specific cases of General Purpose AI (GPAI) systems and a strict regime for high-impact foundation models. They also mandated an EU AI office, AI board and stakeholder advisory forum to oversee the most advanced models.

The ‘surprisingly encouraging news’ is that there is uncharacteristic agreement emerging between the US and EU implementation. As noted elsewhere in this series, many of these regulatory points are highly technical, which is not usually encouraging when dealing with politically charged issues. Because the US Executive Order uses a jurisdictional entry point of ‘dual use’ (militarily significant) technology, it has emphasized defining this cutoff point. Following President Biden’s Executive Order approach, the EU high-risk foundation model definition will similarly apply to models whose training required 10^25 FLOPs of compute – the largest LLMs. This level of global regulatory alignment at this stage of technology development is unprecedented and encouraging. There are many other similar points of technical alignment between the US and the EU. Most big-tech companies will be disappointed at the strong regulation, but reluctantly happy that it is globally consistent. Of course, technologies like synthetic training and quantum computing will shift any computational definition of high-risk, but the mechanism is in play to define the current relevant ‘speed-limit’.
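To make the compute-based cutoff more concrete, here is a back-of-the-envelope sketch in Python. It relies on a commonly used rule of thumb that training a dense transformer costs roughly 6 × parameters × training tokens floating-point operations. That approximation, the function names, the boundary handling, and the example model sizes are assumptions for illustration and do not come from either regulatory text; only the 10^25 figure is taken from the discussion above.

```python
# Threshold cited in the text for EU high-impact foundation models.
EU_THRESHOLD_FLOPS = 1e25


def estimate_training_flops(n_params: float, n_tokens: float) -> float:
    """Rough estimate: ~6 FLOPs per parameter per training token (assumed rule of thumb)."""
    return 6.0 * n_params * n_tokens


def exceeds_threshold(n_params: float, n_tokens: float,
                      threshold: float = EU_THRESHOLD_FLOPS) -> bool:
    """True if the estimated training compute reaches the threshold.

    Treating 'equal to' as over the line is an assumption of this sketch.
    """
    return estimate_training_flops(n_params, n_tokens) >= threshold


# Hypothetical model sizes, chosen only to land on either side of the cutoff.
print(exceeds_threshold(n_params=7e9, n_tokens=2e12))    # False (about 8.4e22 FLOPs)
print(exceeds_threshold(n_params=1e12, n_tokens=1e13))   # True (about 6e25 FLOPs)
```

An estimate like this is only a first screen: it can tell a founder or investor whether a planned training run is anywhere near the cutoff, not whether a regulator would classify the model as high-impact.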

The ‘bad news’ – a study performed by the Stanford University Center for Research on Foundation Models (CRFM) assessed the 10 major foundation model providers (and their flagship models) against twelve key EU AI Act requirements, and found that none of these popular foundation models comply at this time with the preliminary AI Act rules on foundation models.

Insights for Investors / VCs: Closely Track the Regulation of 'Foundation Models.'

- Understand the regulatory risks of developing ‘foundation models’

- Understand the licensing risks of using ‘foundation models’

- Understand the commercial dependencies implicit in ‘foundation models’

Explainable AI – XAI

As we mentioned in Episode 1, the key difference mentioned by Floridi and Cowls between AI and previous technology is “Explicability: Enabling the Other Principles through Intelligibility and Accountability”. This is what’s referred to as XAI – Explainable Artificial Intelligence. XAI refers to the ability of AI systems to provide explanations for their decisions. However, current generative language models like ChatGPT, like most machine learning (ML) systems, often struggle to offer explicit explanations for their responses due to the nature of their training.

“As a language model, I generate responses based on patterns and information learned during the training process, but I do not have the capability to provide explicit explanations for how I arrive at a specific answer. I do not have access to my internal processes or the ability to trace and articulate the specific reasons behind each response.” – ChatGPT3

So, in effect these LLMs, like most Machine Learning AI, fail one of the near universally agreed on principles of AI – Accountability / Explainability. Research published by the Stanford Center for Research on Foundation Models (CRFM) and Stanford Institute for Human-Centered Artificial Intelligence (HAI) offers a Foundation Model Transparency Index and concludes that “the status quo is characterized by a widespread lack of transparency across developers”, and that transparency is decreasing.

Insights for Investors / VCs:

It’s an open question as to whether AI itself might ‘police’ AI.

Can AI regulate AI?

The concept of using AI to regulate AI is a significant challenge: rather than relying on guiding principles during development, or on legal arbitration after a failure, the idea is to use AI in real time to regulate AI. This concept has been advanced by, among others, Bakul Patel, former head of digital health initiatives at the FDA and current head of digital health regulatory strategy at Google. Patel said recently “We need to start thinking: How do we use technology to make technology a partner in the regulation?”.

Notably, Google’s Principles of AI (like those of many of the core developers) specifically call out accountability: “Be accountable to people. We will design AI systems that provide appropriate opportunities for feedback, relevant explanations, and appeal. Our AI technologies will be subject to appropriate human direction and control.”

Of course, there are special ethical challenges with this suggestion, but the fundamental issue is that we can’t scale human regulatory oversight through manual approaches.

Insights for Investors / VCs:

It’s an open question as to whether AI itself might ‘police’ AI.

Overall Conclusion: Stay Vigilant

The governance of artificial intelligence – from the standpoint of technology, organization, ethical frameworks and regulation – is in constant flux. The major lesson is that governance of AI in relation to the venture capital ecosystem is a moving target.

It’s not possible to just create one framework, follow one set of regulations, or analyze just one opportunity. The nature of this radical entrepreneurial time in our techno-history is a need for constant vigilance by technologists, the regulators, and investors.

What's Next? Quantum AI?

Finally, before we leave you, let’s go back to the beginning, when we discussed the impact that LLM-based generative AI chatbots such as ChatGPT will have on the Internet search industry, advertising, website placement, etc.

Clearly, artificial intelligence has been at a major pivot point. This inflection point was the cause for a ‘red alert’ at Google which focused all its energy on responding to this competitive issue. There are two potential additional variables that may radically affect the current AI-wars: synthetic training and quantum computing.

Much of the recent discussion of more rapid AGI/ASI threats concerns the move toward synthetic training of generative AI. Training Machine Learning models is time consuming and costly in data rights, as well as in server and energy costs. But what if you don’t need real data? Speeding up training and lowering its cost could dramatically accelerate the development of these models. Microsoft has recently released data on their Orca 2 project, which uses synthetic training. This could reduce the data lead that Google has over Microsoft.

Another major innovative technology that represents a potential existential challenge is quantum computing. Training foundation models of machine learning takes huge amounts of computing power (this is often cited as a regulatory trigger). Here, too, Google is an innovator.

The Google Sycamore project is a significant practical demonstration of quantum computing technology, which could potentially reduce the computational resources for training and parallelize the workload to address significant problems, challenges, and opportunities in the world today. And IBM just released their new Quantum System Two.

If you want to look into the future and freak out over the risk and opportunity associated with Skynet, the Terminator, Robocop, etc., then consider the FOOM impact of synthetic training and quantum computing on accelerating artificial superintelligence (ASI). The big tech players positioned to combine AI with quantum are the same previously discussed internet search / AI competitors – Google and Microsoft, plus IBM and Alibaba.

Insights for Investors / VCs: The VC ecosystem provides the innovation engine for the future.

Businesses that accelerate the combined revolution of new technologies, i.e., artificial intelligence and quantum computing, will provide the impetus for even larger radical changes in the tech business and the economy in general, and these will come from the venture capital landscape.

While many startups, like OpenAI and DeepMind, may eventually be acquired by Big Tech, innovation is still the primary domain of startups and venture capital, and of the entrepreneurial spirit of the modern fifth industrial economy.

In Summary,

The VC community needs to stay vigilant and proactive in navigating the complex and evolving terrain of AI. By monitoring AI regulation, embracing safety considerations, and making informed investment decisions, VC investors can position themselves for potential success in this rapidly advancing field.