Thesis: The decisive driver of mass AI adoption won’t be model size or compute—it will be architecture: resilient, modular, interoperable systems that embed intelligence into the core of how business operates.

Introduction: The Threshold of a Revolution

Welcome to the moment when AI stops being a dazzling demo and starts running your business. You’ve heard about ever-bigger models and faster GPUs—but the true revolution isn’t raw intelligence; it’s the infrastructure that tames it. Just as Windows made the PC usable and the App Store unlocked mobile, a new layer of agentic, multi-model architecture is making AI not just conceivable, but compulsory.

We’re moving from standalone LLMs to agentic systems—distributed, role-based, workflow-aware architectures that can reason, retrieve, plan, and integrate. This is not a feature race. It’s a platform transition.

You Can’t Afford to Skip This:

- High Stakes, High Reward: Companies that harness resilient, modular AI platforms today will outpace—and outlast—those glued to standalone LLMs, or even worse … no AI.

- Practical Playbook: This blog is more than theory. It’s a structured, two-part guide packed with deep-dive analysis, real-world examples, and “Raiven Take-Aways” you can apply immediately and return to as both primer and playbook.

Come explore the Agentic Era—where models become modules, and AI becomes infrastructure. The format is a ‘pillar blog’ - a longish deep dive overview of the topic, laden with examples and references.

In Section 1, we’ll show how agentic, multi-model AI, paired with “Augmented Humanity,” is the next business infrastructure.

In Section 2, you’ll find how “Context Engineering” is the next execution architecture (the emerging way of designing dynamic AI workflows).

SECTION ONE: From Innovation to Integration

1. From Survival to Superstructure: AI Reinvents Business

The real AI tipping point isn’t a new model—it's a new architecture.

Every breakthrough technology—PCs, the internet, the cloud—only scaled once a full system architecture emerged: components, services, and integration patterns. We’re now reaching that phase with AI.

An architectural layer is forming—one that will allow companies to augment existing workflows, transform operations, and even reinvent value chains.

Four paths are emerging:

- AI-Augmented: Traditional workflows enhanced with tools like copilots and classifiers.

- AI-Transformed: Businesses rewired end-to-end around AI-centered processes.

- AI-Native: Startups born with orchestration, agents, and model selection at the core.

- AI-Absent: Organizations that opt out—and become irrelevant.

This isn’t about trend-chasing. It’s structural adaptation.

- AI-Augmented will become baseline.

- AI-Transformed will compete.

- AI-Native will lead.

- AI-Absent will vanish.

The connective tissue among all viable paths is clear: Augmented Humanity. Not automation for its own sake, but tools that expand human creativity, judgment, and capability.

- Augmented firms improve how people do familiar work.

- Transformed firms redefine what “work” even means.

- Native firms invent entirely new categories of labor and value.

But none of this is possible without architecture. Demos don’t scale. Platforms do.

Architecture is the tipping point—just as HTML was for the web, Docker for the cloud, and the App Store for mobile.

2. Architecture Drives Technology Revolutions in Business

Every major tech wave follows the same arc:

- Innovation sparks breakthroughs—powerful but fragmented.

- Architecture imposes structure—interfaces, patterns, abstractions.

- Adoption follows—when systems become usable, composable, and economically viable.

This isn’t a trend. It’s a law of diffusion.

- Everett Rogers’ Diffusion of Innovations mapped this in the S-curve of innovation adoption.

- Geoffrey Moore’s Crossing the Chasm added that breakthroughs don’t reach the mainstream without reliable, repeatable architecture.

- Carlota Perez’s Technological Revolutions and Financial Capital showed that real deployment, when revolutions transform society, requires institutional and infrastructural alignment.

AI is entering the architecture phase right now.

Foundation models are powerful but raw. They hallucinate. They forget. They’re not interoperable or explainable. They’re not infrastructure. They’re ingredients.

The real breakthrough is an architectural ecosystem:

- Connectors, contracts, and orchestration.

- Memory and modular reasoning.

- Standardized protocols and system-level transparency.

This lets businesses connected to this emerging ecosystem make better use of AI.

RAIVEN TAKE-AWAY

Don’t just focus on LLM features; focus on the platform architecture ecosystem. Architecture is the multiplier.

3. A History of Tech Revolutions

Technology doesn’t scale on breakthroughs alone—it scales when it gets an operating model.

The pattern is remarkably consistent:

- Breakthroughs emerge—powerful, isolated, experimental.

- Architecture arrives—codifying protocols, standards, and integration layers.

- Adoption accelerates—because the tech becomes usable, scalable, and trustable.

Moore’s Law drove the raw acceleration of compute. But adoption? That came from resolving the friction—organizational inertia, compliance, integration complexity. And that friction is only tamed through architecture.

Let’s look at the inflection points:

- PCs: 1977 (Apple II) → 1995 (Windows 95 + LANs). Personal computing mattered once it networked and scaled inside business.

- Internet: 1990 (WWW) → 2005 (SaaS, broadband, secure payments). The web became economic infrastructure in the 2000s, not the ’90s.

- Mobile: 1983 (1G) → 2008 (iPhone + App Store). Mobile transformed work only once smartphones met developer platforms.

- Cloud: 2006 (AWS) → 2020 (Kubernetes + CI/CD). The cloud scaled when containerization solved deployment at scale.

In each case, architecture wasn’t optional. It was the unlock. And in each case, while the tech is cycling faster, it still takes about 5 years to absorb a tech revolution into broad-based business practice. It’s all about culture, training, ecosystems…

Now it’s AI’s turn. The architecture moment has arrived.

RAIVEN TAKE-AWAY

Understand the historical cadence of infrastructure tipping points. That’s where the opportunity lives.

4. The Architecture Gap in AI Today

Foundation models have delivered the “wow”—but not yet the full “how.”

LLMs and foundation models like GPT-4, Claude, and Gemini are capable of synthesis, multimodal reasoning, and contextual interaction. But try operationalizing them inside a business, and the seams show instantly.

These models are still monolithic. Their limitations aren’t bugs—they’re architectural constraints:

- Hallucination without audit trails

- No memory across sessions—no persistent identity

- No native ability to coordinate across tools or workflows

- No internal explainability—just post-hoc rationalization

RAG (retrieval-augmented generation) helps—but it’s a patch, not a platform. It’s a bolt-on context loader, not an architectural solution.

Early efforts to exploit foundation models often fail to improve the business, and their errors can even make things worse. And even if you get it right… model drift will mutate your app over time.

The real breakthrough requires a reframing:

Models aren’t endpoints. They’re components.

We need to stop treating LLMs as the system and start wiring them into systems. We’ve seen this movie before. Relational databases were a major business advance, and their vendors offered stored procedures and triggers to ‘add on’ business logic to the database, but the business solution was the three-tier architecture, which separated data, logic, and interface. That separation unlocked the modern web.

AI needs the same decoupling.
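One way to picture that decoupling is a minimal Python sketch in which the business logic depends only on a small interface and any model sits behind it as a swappable component. The names `TextModel`, `EchoModel`, `UppercaseModel`, and `summarize_ticket` are hypothetical stand-ins for illustration, not any vendor's API.

```python
from typing import Protocol


class TextModel(Protocol):
    """Hypothetical interface: anything that turns a prompt into text."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in model for illustration; a real adapter would call a vendor API here."""

    def complete(self, prompt: str) -> str:
        return f"[echo] {prompt}"


class UppercaseModel:
    """A second stand-in, to show that the component can be swapped."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


def summarize_ticket(model: TextModel, ticket_text: str) -> str:
    """Business logic lives here and never names a specific model."""
    prompt = f"Summarize this support ticket: {ticket_text}"
    return model.complete(prompt)


if __name__ == "__main__":
    # The same business function runs unchanged against either component.
    for model in (EchoModel(), UppercaseModel()):
        print(summarize_ticket(model, "Login fails after password reset"))
```

Because `summarize_ticket` only sees the interface, replacing one model with another is a one-line change at the call site rather than a rewrite of the workflow.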

RAIVEN TAKE-AWAY

Treat architectural separation as a feature. Build for modularity now to preserve flexibility later.

5. The Evolution of AI Architecture: From Models to Ecosystems

AI’s evolution isn’t linear. It’s phasic—and it’s accelerating. In just five years, we’ve gone from raw models to dynamic ecosystems.

Let’s trace the emergence of AI ‘architecture’:

2019 – 2020: Foundations of Modularity

- Eric Drexler’s CAIS (Comprehensive AI Services) at Oxford: orchestration over monoliths.

- Google’s GShard and Switch Transformer: mixture-of-experts at scale.

Insight: Intelligence doesn’t need to be centralized. It can be distributed—and selectively activated.

2020 – 2021: Retrieval and Hybrid Reasoning

- Meta’s RAG: structured retrieval meets language generation.

- IBM’s neurosymbolic AI: perception plus logic.

- OpenAI’s CLIP, DeepMind’s Perceiver IO: multi-modal input channels.

Insight: Language models need grounding—facts, structure, and perceptual feedback.

2022 – 2023: Agents and Tool Use

- OpenAI’s function calling, Meta’s Toolformer

- Frameworks like AutoGPT, AutoGen, CrewAI

→ Planning, memory, tool invocation, and delegation

Insight: Language becomes the command interface. AI starts acting, not just responding.

2024 – 2025: Protocol-Driven Coordination

- Google’s A2A Protocols: agent-to-agent memory and task negotiation.

- Anthropic’s MCP: reliable, secure communication between models and tools.

Insight: Protocols formalize interoperability. This isn’t just orchestration—it’s infrastructure.

The big shift?

We’re no longer scaling models. We’re building systems: modular, persistent, interoperable systems.

RAIVEN TAKE-AWAY

AI Architecture is no longer theoretical. It’s live. If you’re not building toward it now, you’ll be downstream from those who are.

Original frame from Disclosure (1994), directed by Barry Levinson. © Warner Bros. Entertainment Inc.. AI-assisted transformation using DALL·E 3, edited by James Baty. Used under fair use for artistic commentary, research and education.

6. Defining Agentic Architecture: From Models to Modular Systems

The defining shift in AI architecture is this:

From monolithic intelligence to modular agency.

Agentic architecture isn’t a product class—it’s a new layer in enterprise computing.

It reframes AI as an ecosystem of roles:

- Foundation models for reasoning and generation

- Protocols for memory, delegation, coordination

- Agents with task scope, persistent memory, tool access

- Orchestration layers that route and manage flow

Key Enablers:

- MCP (Model Context Protocol): Think “HTML for AI”—a standardized interface for prompt, memory, tools, and output.

- A2A (Agent-to-Agent): Task coordination protocols with memory sharing, retry logic, and dynamic delegation.

- Memory + Meta-Reasoning Layers: Long-term identity, cross-session memory, and internal optimization.

These aren’t UX features. They’re structural primitives.

We’re not building chatbots. We’re building persistent, collaborative, digital cognitive systems.

And the roots go deep—back to Drexler’s CAIS vision of composable AI services governed by orchestration logic. That theory is now real infrastructure—supported by open-spec alliances and proprietary stacks alike. While every foundation model vendor is pushing its own architecture—Anthropic’s Claude APIs, OpenAI’s tool use model, Google’s Gemini stack—the trajectory is clear: intelligence will be modular and composable.

A parallel advance is the Mixture of Experts (MoE) in deep learning, where inputs activate only the relevant sub-models rather than the entire network. Agentic systems extend this concept to the system level: each request is routed to only the agents, models, or tools best suited to handle it.
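As a rough illustration of selective activation at the system level, the sketch below routes each task to a single specialist and leaves the others idle. The agent functions, the `ROUTES` table, and keyword matching are all assumptions chosen for brevity; a production router would more likely use a classifier or a model call.

```python
from typing import Callable, Dict


# Hypothetical specialist agents: each is just a function from task text to a reply.
def legal_agent(task: str) -> str:
    return f"legal review of: {task}"


def code_agent(task: str) -> str:
    return f"code analysis of: {task}"


def general_agent(task: str) -> str:
    return f"general answer to: {task}"


# Assumed routing table mapping a keyword to the specialist that handles it.
ROUTES: Dict[str, Callable[[str], str]] = {
    "contract": legal_agent,
    "compliance": legal_agent,
    "bug": code_agent,
    "refactor": code_agent,
}


def route(task: str) -> Callable[[str], str]:
    """Pick the first specialist whose keyword appears in the task, else the generalist."""
    lowered = task.lower()
    for keyword, agent in ROUTES.items():
        if keyword in lowered:
            return agent
    return general_agent


def handle(task: str) -> str:
    """Activate only the selected agent; the others are never invoked."""
    return route(task)(task)


if __name__ == "__main__":
    print(handle("Review this contract clause"))
    print(handle("Fix the login bug"))
    print(handle("What is our holiday policy?"))
```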

RAIVEN TAKE-AWAY

Architecture is the interface. Structure is the product. Design your AI stack like you’d design a business operating system.

7. Agent Taxonomies and Systemic Composition

The era of “the chatbot” is over. We’re entering the age of cognitive teams—layered constellations of agents, each with defined roles, responsibilities, and memory.

What we’re seeing is the rise of composable intelligence. Not just a proliferation of agents, but systems that are designed to be built from agents.

Common emerging agent roles:

- Foundation Agents – Orchestrators that decompose tasks and manage delegation

- Specialist Agents – Domain-specific experts (legal, code, finance)

- Persistent Agents – Memory-aware collaborators that span sessions and contexts

Open directories like Awesome Agents, Mychaelangelo’s Agent Market Map, and AI Agents List are tracking hundreds of emerging agent types and stacks. But the key trend isn’t the number of agents—it’s the composability of systems. We’re seeing the shift from:

“Agents as apps” → “Agents as infrastructure primitives”

Just as the cloud turned compute into APIs, agentic AI is turning cognition into composable services. Modularity, not monoliths, will define the future of enterprise intelligence.

RAIVEN TAKE-AWAY

There are lots of types of Agents, and Agentic Systems… the key is to know how to leverage this new architecture for your business.

8. Autonomy: The Gradient Between Tools and Minds

Agentic AI introduces not just a new interface—but a spectrum of autonomy. And where your system sits on that spectrum determines what it can actually do.

Here’s a high-level breakdown:

| Level | Type | Description | Example |
|---|---|---|---|
| 5 | Meta-Cognitive Agent | Reflective, goal-revising, self-improving | AGI prototypes (research-only) |
| 4 | Autonomous Agent | Task-directed over time and context | Internal product manager agents |
| 3 | Semi-Autonomous Agent | Multi-step planning, memory-aware | AutoGPT-style agents |
| 2 | Reactive Agent | Context-aware, prompt-driven behavior | GPT-4 with tools |
| 1 | Scripted Agent | Deterministic flow, no adaptive logic | RPA bot, cron job |
| 0 | Tool | Stateless, single-function | SQL query, calculator |

This taxonomy is similar to the agent autonomy level models used by Microsoft, OpenAI, LangChain, and others. Most business-ready systems today cluster around Levels 2–4: adaptive, persistent, often proactive (e.g., Level 2: GitHub Copilot’s code suggestions; Level 3: Adept Agent Builder).

But autonomy is not binary—it’s a design axis.

You don’t need Level 5 autonomy to start. But you do need to plan for upward mobility.
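Treating autonomy as a design axis can be as simple as making the level an explicit, configurable value that gates what an agent may do. The sketch below reuses the levels from the table above; the capability names and the minimum level assigned to each are assumptions for illustration, not part of any published scale.

```python
from enum import IntEnum
from typing import Dict, List


class Autonomy(IntEnum):
    """Levels taken from the table above."""

    TOOL = 0
    SCRIPTED = 1
    REACTIVE = 2
    SEMI_AUTONOMOUS = 3
    AUTONOMOUS = 4
    META_COGNITIVE = 5


# Assumed mapping from a capability to the minimum level that unlocks it.
MIN_LEVEL: Dict[str, Autonomy] = {
    "call_tool": Autonomy.REACTIVE,
    "multi_step_plan": Autonomy.SEMI_AUTONOMOUS,
    "persist_memory": Autonomy.SEMI_AUTONOMOUS,
    "act_unprompted": Autonomy.AUTONOMOUS,
    "revise_own_goals": Autonomy.META_COGNITIVE,
}


def allowed(level: Autonomy, capability: str) -> bool:
    """Return True if an agent configured at `level` may use `capability`."""
    return level >= MIN_LEVEL[capability]


def capabilities(level: Autonomy) -> List[str]:
    """List every capability unlocked at `level`, in declaration order."""
    return [name for name, minimum in MIN_LEVEL.items() if level >= minimum]


if __name__ == "__main__":
    print(capabilities(Autonomy.REACTIVE))
    print(capabilities(Autonomy.SEMI_AUTONOMOUS))
```

Moving an agent up the scale then becomes a configuration change that is easy to review, rather than a redesign.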

RAIVEN TAKE-AWAY

Architect for evolution.

Your Level 3 agent will need memory, reasoning, and tool chaining tomorrow. Build flexibility in today.

9. Agents and the Architecture of Augmented Humanity

This isn’t just about machines working with machines. It’s about rethinking how humans work, alongside systems that can reason, remember, and collaborate.

A 2024 Stanford study made it clear: Even when asked to consider downsides like job loss or reduced control, 46.1% of workers still favored AI automation. Why? The dominant reason, selected by 70%, was simple:

“To free up time for high-value work.”

Others cited:

- Repetitive tasks (46.6%)

- Stressful workflows (25.5%)

- Quality improvements (46.6%)

The study proposed a Human Agency Scale, with Level 3 – Moderate Collaboration as the sweet spot: humans retain oversight, while AI handles execution.

This is the core of the Fifth Industrial Revolution (5IR):

Not automation for replacement. Augmentation for elevation.

Agentic architecture makes this real—not just a philosophy, but an executable system design.

This isn’t AI-as-threat. It’s AI-as-exoskeleton—a structural extension of human capability.

RAIVEN TAKE-AWAY

Architect workflows where humans retain strategy, judgment, and direction—while agents own the repetition, integration, and optimization.

10. Agentic AI as the Platform for New Business Ecosystems

Every major tech wave created new platforms:

- PCs gave us Office.

- The Cloud gave us Salesforce.

- Mobile gave us the App Store.

Agentic AI will give us something bigger:

Cognition-as-a-platform.

It’s already happening:

- Microsoft’s 2025 roadmap embeds agents across cloud, productivity, security, and dev environments.

- Mixture-of-Experts (MoE) is becoming a runtime default, intelligently routing requests to the best-suited model or tool.

- Orchestration stacks are fusing LLMs, vision models, databases, compilers, and simulators into dynamic pipelines.

What’s emerging isn’t just smarter software. It’s a new operating layer.

Agents aren’t apps. They’re runtime building blocks.

Operators won’t just use them; they’ll compose them.

Just like developers write functions and connect services, the next-gen enterprise will orchestrate intelligent teams of domain-specific agents—linked by memory, protocol, and observability.

RAIVEN TAKE-AWAY

Design for Human Agency Level 3: AI executes, humans decide.

Target Agent Autonomy Levels 2–4: context-aware, memory-capable, tool-using.

Structure for modularity: your future lies in orchestration, not integration.

That’s the architecture of an augmented enterprise. And it’s no longer theoretical. It’s the stack that will define who wins the next business cycle.

11. But is it a 'stack' or a 'cloud'?

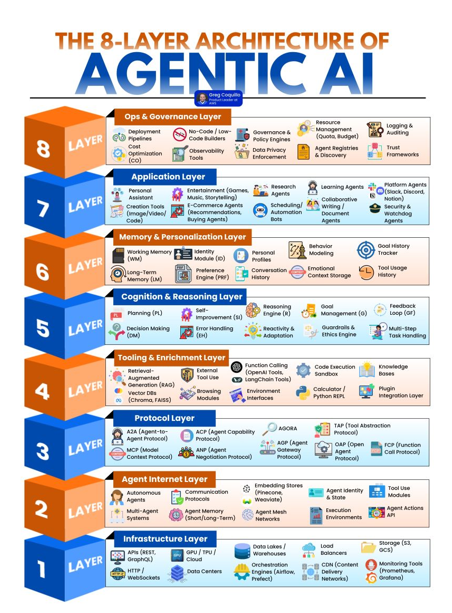

There are many types and perspectives of architecture diagrams. And certainly many have suggested classical ‘layered’ diagrams to describe Agentic AI, like this one from Greg Coquillo at AWS…

Of course, architectural diagrams always represent a select design and operational perspective. Others would thus suggest that multi-model, agentic AI architectures are better conceptualized as an ecosystem of autonomous services, similar to a microservices-centric cloud architecture...

And this is an archetypal IBM microservices cloud architecture (drawn with the IBM Cloud Architecture Diagram maker). This diagram emphasizes the multi-path execution and communication typical of such designs (and thus highlights design aspects similar to potential multi-model, agentic AI architectures)...

So, to recap, Phase 1 of the AI business revolution was essentially: pick an LLM and try to ‘use’ it, maybe RAG it or train an SLM (the past).

And the new Phase 2 of the AI business revolution is: build or pick an ‘agent’ and select the subordinate associated model… the ‘Architecture’ phase (the now).

The next section will describe a rapidly emerging Phase 3, the currently hotly debated idea of vibe coding an ‘application’ on top of an agent/model stack (the future).

SECTION TWO: The Future Landscape

12. Software 3.0: When Language Becomes Architecture

Just months ago, Andrej Karpathy was still explaining Software 2.0—systems coded not by humans, but by training neural networks on data.

Now he’s moved the goalposts again. In a landmark 2025 talk at Y Combinator’s Startup School, Karpathy declared the next shift:

Software 3.0 – You build software by talking to it.

This isn’t a metaphor. It’s a platform shift.

Your ops lead can now prototype tools.

Your customer can configure features.

Your investor can build a dashboard before the pitch ends.

"Your competition still sees a divide between technical and non-technical users. But everyone who speaks English can now build software."

— Andrej Karpathy

In this view, LLMs are the new operating systems, and agents, tool APIs, and context protocols are the new App Store. The developer hasn’t disappeared—but the monopoly on building has.

Karpathy’s advice to startups:

- Empower curiosity – Design for users who build by “vibe coding”

- Design for agents – Your users now include AI agents

- Architect around prompts – System prompts are no longer tuning knobs; they’re behavioral blueprints

Language is no longer just the interface. It’s the architecture.

And that changes everything.

RAIVEN TAKE-AWAY

Treat prompt design as product design. The system prompt is now the system spec

13. Diagramming Multi-Agent, Multi-Model Architecture

Similar to cloud microservices architecture diagrams, the visual language of Agentic AI typically emphasizes collections of resources and multi-path communications...

From: Neil Shah, Multi-Agentic Systems: A Comprehensive Guide

From: Rajeev Bhuvaneswaran / HTC Global Services, Agentic AI: The Future Of Autonomous Decision-Making

And thus the Agentic AI architecture is more appropriately viewed as a toolkit or collection of resources where the ‘Architecture’ is manifested by a combination of instructions at runtime (context engineering) and even autonomous decisions of the agents themselves.

14. Prompting as Architecture – The Rise of Context Engineering

In classical systems, architecture was physical: models, APIs, interfaces, logic gates. You built the machine and wired its logic.

In agentic systems, runtime behavior is shaped not by circuits—but by language.

The prompt is the Architecture:

This shift is already reshaping AI development. Prompts are no longer inputs—they’re dynamic control structures.

This is what Karpathy, Mellison, and others now call context engineering:

- A new design discipline where language governs execution

- Where retrieval, memory, tool use, and instruction are composable and programmable in real time

Prompt design is now system architecture, but it’s beyond just a mélange of system prompts, default system context, and prompt engineering – Context Engineering is all that and more.

Key prompt-based constructs:

- Role Prompts – Define identity, goals, and behavioral boundaries

→ “You are a legal reviewer. Your job is to minimize regulatory risk.”

- Tool Invocation Prompts – Dictate when and how external tools should be triggered

- Orchestration Prompts – Chain multi-agent behavior into workflows

Emerging advanced layers:

- Meta-prompts – Self-reflective instructions for revising plans and strategies

- Task Decomposition – Breaking a goal into subtasks based on language structure

- Memory Shaping – Defining what to remember, forget, or prioritize across turns

Context Engineering isn’t UX. It’s execution logic.

“Context engineering is the art and science of filling the context window with just the right information at each step of an agent’s trajectory” (LangChain Blog)
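The LangChain definition above can be made concrete with a small sketch: gather candidate context from several sources, then keep only what fits a token budget, most important first. Everything here is an assumption for illustration, including the `ContextItem` fields, the word-count stand-in for a tokenizer, and the greedy selection rule.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ContextItem:
    source: str    # e.g. "role", "memory", "retrieval", "tool"
    text: str
    priority: int  # lower number means more important


def estimate_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: count whitespace-separated words."""
    return len(text.split())


def build_context(items: List[ContextItem], budget: int) -> List[ContextItem]:
    """Greedily keep the highest-priority items that still fit within the budget."""
    chosen: List[ContextItem] = []
    used = 0
    for item in sorted(items, key=lambda i: i.priority):
        cost = estimate_tokens(item.text)
        if used + cost <= budget:
            chosen.append(item)
            used += cost
    return chosen


def render(items: List[ContextItem]) -> str:
    """Join the selected items into one prompt, labeling each by its source."""
    return "\n".join(f"[{item.source}] {item.text}" for item in items)


if __name__ == "__main__":
    candidates = [
        ContextItem("role", "You are a legal reviewer. Your job is to minimize regulatory risk.", 0),
        ContextItem("retrieval", "Clause 4 limits liability to fees paid.", 1),
        ContextItem("memory", "The user asked about an unrelated invoice last week.", 2),
    ]
    # With a 20-word budget, the low-priority memory item is left out.
    print(render(build_context(candidates, budget=20)))
```

The point of the sketch is that what enters the context window is a programmable decision made at each step, not a fixed string.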

The future won’t be built in Python.

It will be structured in English, versioned like infrastructure, and executed through agents.

RAIVEN TAKE-AWAY

If you're building systems with real autonomy, you need explainability built into the bones—not glued on after deployment.

15. Explainability, Alignment, and the Ethics of Infrastructure

We’ve crossed the threshold where explainability is no longer optional—it’s operational.

- The EU AI Act mandates transparency in all high-risk systems

- NIST’s AI RMF demands traceability and outcome monitoring

- The DoD’s Responsible AI Strategy requires auditability, oversight, and control

But most systems today are still opaque:

- They can’t explain decisions

- They fabricate rationales post hoc

- They can't trace where an answer came from

This isn’t just a governance failure—it’s an architectural failure.

You can’t align what you can’t inspect.

You can’t inspect what you didn’t structure.

Agentic architectures offer a way forward. With modular, role-scoped agents—each with logging, decision boundaries, and memory—you get inspectable behavior by design.
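To show what inspectable by design might look like in practice, here is a minimal sketch of an agent wrapper with a role, an allow-list that acts as its decision boundary, and an append-only log. The `ScopedAgent` class and its field names are hypothetical, not taken from any framework.

```python
import json
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class ScopedAgent:
    """Hypothetical wrapper: a role, an allow-list of actions, and an append-only log."""

    role: str
    allowed_actions: Set[str]
    log: List[Dict[str, str]] = field(default_factory=list)

    def act(self, action: str, reason: str) -> bool:
        """Record every attempted action and refuse anything outside the boundary."""
        permitted = action in self.allowed_actions
        self.log.append({
            "role": self.role,
            "action": action,
            "reason": reason,
            "outcome": "executed" if permitted else "refused",
        })
        return permitted

    def audit_trail(self) -> str:
        """Serialize the log so a reviewer can see what happened and why."""
        return json.dumps(self.log, indent=2)


if __name__ == "__main__":
    reviewer = ScopedAgent("legal_reviewer", {"flag_clause"})
    reviewer.act("flag_clause", "indemnity cap missing")
    reviewer.act("send_email", "notify counterparty")  # outside scope, so refused
    print(reviewer.audit_trail())
```

Refused attempts are logged alongside executed ones, which is what lets a reviewer reconstruct the agent's behavior after the fact.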

Alignment isn’t a tuning problem. It’s a system design problem.

RAIVEN TAKE-AWAY

FOR BUILDERS:

Treat prompts as code modules—testable, modular, and evolvable.
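A minimal sketch of a prompt handled as a code module: the wording lives in a versioned template, a function renders it, and a unit test guards the behavioral contract. The template text, the version constant, and the function names are illustrative assumptions.

```python
from string import Template

# Assumed convention: version the prompt next to the code so changes are reviewable.
PROMPT_VERSION = "1.0.0"

REVIEWER_PROMPT = Template(
    "You are a $role. Your job is to $goal.\n"
    "Never $forbidden.\n"
    "Document under review:\n$document"
)


def render_reviewer_prompt(document: str) -> str:
    """Fill the template with fixed role settings and the caller's document."""
    return REVIEWER_PROMPT.substitute(
        role="legal reviewer",
        goal="minimize regulatory risk",
        forbidden="give a final legal opinion",
        document=document,
    )


def test_prompt_keeps_role_and_boundary() -> None:
    """Fails if an edit to the wording drops the role or the behavioral boundary."""
    prompt = render_reviewer_prompt("Clause 4 limits liability.")
    assert prompt.startswith("You are a legal reviewer.")
    assert "Never give a final legal opinion." in prompt
    assert prompt.endswith("Clause 4 limits liability.")


if __name__ == "__main__":
    test_prompt_keeps_role_and_boundary()
    print(render_reviewer_prompt("Clause 4 limits liability."))
```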

RAIVEN TAKE-AWAY

FOR ORGANIZATIONS:

Treat prompt design as product design. The system prompt is now the system spec

Key enablers of ethical AI infrastructure:

- Token-Level Provenance – Trace where each word came from: memory, tool, or prompt

- Hierarchical Transparency – Understand which agent made what decision, and why

- Dynamic Governance Protocols – Escalate uncertain cases to humans by default

Alignment strategies must move from declarations to enforcement layers. That’s what agentic architecture enables.
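As one possible shape for such an enforcement layer, the sketch below tags each piece of an answer with its origin and sends anything below a confidence threshold to a human. It works at span level, a coarser stand-in for token-level provenance, and the `Span` and `Answer` types, the confidence score, and the 0.8 threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Span:
    text: str
    source: str  # "memory", "tool", or "prompt"


@dataclass
class Answer:
    spans: List[Span]
    confidence: float  # assumed to be supplied by the agent, between 0.0 and 1.0

    def text(self) -> str:
        """Reassemble the answer as the user would read it."""
        return " ".join(span.text for span in self.spans)

    def provenance(self) -> List[str]:
        """Report the origin of each span, in order."""
        return [f"{span.source}: {span.text}" for span in self.spans]


ESCALATION_THRESHOLD = 0.8  # hypothetical cutoff; tune per use case


def dispatch(answer: Answer) -> str:
    """Escalate by default: only sufficiently confident answers skip human review."""
    if answer.confidence >= ESCALATION_THRESHOLD:
        return "auto_approved"
    return "escalated_to_human"


if __name__ == "__main__":
    draft = Answer(
        [Span("Liability is capped", "tool"), Span("per your earlier request", "memory")],
        confidence=0.55,
    )
    print(draft.provenance())
    print(dispatch(draft))
```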

16. Strategic Outlook: 2025–2030

The next five years will not be defined by model size. They will be defined by infrastructure intelligence—how well your systems are structured, orchestrated, and governable.

Four trajectories are already clear:

- Operational AI – Agents managing entire workflows: from data to decision to action

- Agentic Orchestration Platforms – Think Zapier + Kubernetes for cognitive systems

- XAI-Ready Stacks – Explainable by design, not by apology

- Protocol Standardization – MCP, A2A, and memory contracts forming the TCP/IP of intelligent software

Winners will be those who:

- Build for agents

- Build with agents

- Plug into a networked, modular, protocol-native ecosystem

RAIVEN TAKE-AWAY

FOR BUILDERS:

Design for agent collaboration and modular tool catalogs—not isolated apps

RAIVEN TAKE-AWAY

FOR INVESTORS:

Ask the architectural questions:

- Is it agent-native or model-bound?

- Is it protocol-aligned or siloed?

- Is it interoperable or brittle?

This is the end of closed AI silos.

The Agentic Era is networked. Composable. Aligned. And it’s arriving faster than legacy platforms can adapt.

Original frame from Iron Man 2 (2010), directed by Jon Favreau. © Marvel Studios (Walt Disney Company), distributed by Paramount Pictures. AI-assisted transformation using DALL·E 3, edited by James Baty. Used under fair use for artistic commentary, research and education.

17. Beyond Business: AGI, ASI, and Civilizational Risk

Some agentic architectures won’t drive business outcomes—they’ll define the future of cognition itself.

Leaders like Sergey Brin and Demis Hassabis are chasing AGI via Gemini and DeepMind AlphaCode 2. Others, like Ilya Sutskever at Safe Superintelligence Inc., are racing to build AGI-safe architectures from first principles.

But here’s the key:

Alignment doesn’t begin at AGI. It begins now.

Every agent you deploy today—

- That lacks explainability

- That can’t be audited

- That ignores constraints

— is a structural risk vector.

The danger isn’t just a rogue AGI tomorrow. It’s ungoverned complexity today.

Agentic architecture doesn’t solve alignment—but it’s the only substrate on which real alignment strategies can operate.

- Memory boundaries

- Execution constraints

- Agent verification layers

- Delegation rules

- Human escalation triggers

These aren’t feature requests.

They’re civilizational guardrails.

RAIVEN TAKE-AWAY

We don’t need smarter machines. We need knowable ones.

And we need them before the scale tips past visibility

Conclusion: Architecture Is Destiny

Every major technology leap becomes real not when the core tech matures, but when the architecture makes it usable, scalable, and safe.

- HTML didn’t invent the web—it made it inevitable

- Docker didn’t invent the cloud—it made it repeatable

- The App Store didn’t invent mobile—it made it industrial

AI is now standing on that same edge.

The next five years will not be won by larger models. They’ll be won by:

- Protocols like MCP and A2A

- Persistent memory and composable agents

- Prompt-based systems that act, reason, and adapt

This is what agentic architecture unlocks:

- Systems that don’t just respond—but collaborate

- That don’t just predict—but participate

Whether AI becomes general-purpose business infrastructure—or collapses under brittle demos—depends entirely on what we build now.

The chasm is real.

The bridge is architecture.

And the time to cross is now.

RAIVEN TAKE-AWAY

Whether you are a multinational conglomerate or a two-person startup, having an ‘AI strategy’ is a must. AI-Augmented, AI-Transformed, or AI-Native… just don’t be AI-Absent

But be strategic. Consider the long-term architecture

Ready to go? Wait!!

The same super-advocates of the vibe-coding, context engineering future warn of the large risks in piloting these new systems…

Karpathy….

- yells at the Y Combinator startup students, “But everyone who speaks English can now build software!”

- elsewhere cautions vigilant oversight, as he “Warns Against Unleashing Unsupervised Agents Too Soon: Keep AI On the Leash!!”

- “(LLMs), though impressive, are still fundamentally flawed and unreliable without human oversight”

Debugging AI and the 70% rule

A survey of 500 software engineering leaders shows that although nearly all (95%+) believe AI tools can reduce burnout, 59% say AI tools are causing deployment errors "at least half the time." Consequently, 67% now "spend more time debugging AI-generated code," with 68% also dedicating more effort to resolving AI-related security vulnerabilities.

Much AI-generated code isn't fully baked. As Peter Yang notably observed, "it can get you 70% of the way there, but that last 30% is frustrating," often creating "new bugs, issues," and requiring continued expert oversight.

TurinTech emphasizes that "AI-assisted development tools" promise speed and productivity, yet new research—including their recent paper, Language Models for Code Optimization —shows "AI-generated code is increasing the burden on development teams."

See the Stack Overflow 2024 Developer Strategy survey rankings for business use of AI.

While attention has focused on the risks of AI-generated code, there's also explosive growth in using AI to test existing codebases.

The Exploding Focus on AI-Enabled Testing

AI-enhanced testing of existing human-developed code has rapidly emerged as a strategic investment, backed by authoritative experts and compelling data from industry leaders.

| Expert / Source | Benefit | AI Technique | Example |
|---|---|---|---|
| Martin Fowler (TW) | Better bug coverage | AI-generated edge cases, test assist | TW CI/CD projects |
| Diego Lo Giudice (Forrester) | Lower test maintenance | Predictive test selection, anomaly detect | Forrester enterprise case studies |
| IDC (2024) | Tighter regressions, less waste | Defect prediction, test prioritization | Netflix "Test Advisory", Meta "Sapienz" |

AI-powered testing is now a risk-smart first move, fortifying human code before replacing it.

RAIVEN TAKE-AWAY

MULTI-MODEL AGENTIC AI IS A KEY EMERGING ARCHITECTURE THAT ENABLES MORE BUSINESS USE OF AI. AI IS THE NEXT BIG ARCHITECTURE SHIFT, AND THAT ENABLES RAPID TRANSFORMATION.

BUT THE TOOLS ARE ONLY EMERGING, AND A CAUTIOUS STRATEGY CAN BE CRITICAL TO MEASURED, SUSTAINABLE GAINS.

INVEST IN ARCHITECTURE : MODULAR SYSTEMS WORK BEST WITH LLM DRIVEN GENERATION.

TRACK THE DATA : BENCHMARK PRODUCTIVITY VERSUS BUG/SECURITY LOAD TO AVOID FALSE GAIN

PILOT, DON'T REPLACE : USE AI FOR GRUNT TASKS, PROTOTYPING, AND CODE SUGGESTIONS—NOT FULL REWRITES.

ENFORCE REVIEW & TESTING: PAIR WITH RIGOROUS CODE REVIEWS, SECURITY SCANS, AND HUMAN CHECKS, ESPECIALLY ON COMPLEX SECTIONS.